Improve Your Experience with Kubernetes

Nowadays, containerization and microservices are two of the trending concepts in DevOps. Let's walk through them in this blog article.

Containers

Container management system...

Let's move on to microservices...

Docker comes to the table...

Why Kubernetes?

Are Docker and Kubernetes the same?

No...

Kubernetes Features

Service discovery and load balancing:

Automatic binpacking:

Storage orchestration:

Self-healing:

Automated rollouts and rollbacks:

Secret and configuration management:

Batch execution:

Horizontal scaling:

Containers

Containers are an abstraction at the app layer that packages code and dependencies together. Multiple containers can run on the same machine and share the OS kernel with other containers, each running as an isolated process in user space. Containers take up less space than VMs (container images are typically tens of MBs in size), can handle more applications, and require fewer VMs and operating systems.

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

Figure: Virtual machines vs. containers

Let's move on to microservices...

The key idea of microservices is that some types of applications become easier to build and maintain when they are broken down into smaller, composable pieces which work together. Each component is continuously developed and separately maintained, and the application is then simply the sum of its constituent components. This is in contrast to a traditional, "monolithic" application, which is developed all in one piece.

Applications built as a set of modular components are easier to understand, easier to test, and most importantly easier to maintain over the life of the application. This modularity enables organizations to achieve much higher agility and to vastly reduce the time it takes to get working improvements into production.

Figure: Containers on Docker

Docker comes to the table...

Docker has enabled developers to use containers when working on any application, whether it is a new microservice or an existing application. Containers package up the code, configs and dependencies into an isolated bundle, potentially making the application more secure and portable. However, when you need to manage an entire portfolio of applications, containers alone are not enough, as they do not directly address the compliance, security and operational governance needs of your organization.

For more details, please refer to my Docker article.

Figure: Docker logo

Why Kubernetes?

Kubernetes provides a container-centric management environment. It orchestrates computing, networking, and storage infrastructure on behalf of user workloads. This provides much of the simplicity of Platform as a Service (PaaS) with the flexibility of Infrastructure as a Service (IaaS), and enables portability across infrastructure providers.

Figure: Kubernetes logo

Are Docker and Kubernetes the same?

No.

From a distance, Docker and Kubernetes can appear to be similar technologies; they both help you run applications within Linux containers. If you look a little closer, you'll find that the technologies operate at different layers of the stack, and can even be used together.

Docker helps you create and deploy software within containers. It’s an open source collection of tools that help you to build, ship, and run any app, anywhere.

Kubernetes is an open source container orchestration platform that allows large numbers of containers to work together in harmony, reducing the operational burden. It takes care of running containers, scaling up or down by adding or removing containers, keeping multiple instances of an application consistent, distributing load between the containers, and launching new containers on different machines if something fails.

Kubernetes Features

Service discovery and load balancing:

- No need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.
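As a rough illustration of "one name for a set of containers", the Python sketch below models a service that hands out Pod IPs in turn. The `Service` class, the Pod IPs, and the round-robin policy are all assumptions made for this example; it is not how Kubernetes itself chooses a backend.

```python
from itertools import cycle


class Service:
    """Toy stand-in for a Kubernetes Service: one stable name, many Pod IPs."""

    def __init__(self, name, pod_ips):
        self.name = name
        # cycle() yields the endpoints in order, forever (round-robin).
        self._endpoints = cycle(pod_ips)

    def route(self):
        """Return the Pod IP that should receive the next request."""
        return next(self._endpoints)


# Hypothetical Pod IPs, chosen only for illustration.
service = Service("nginx", ["10.244.1.5", "10.244.2.7"])
picks = [service.route() for _ in range(4)]
print(service.name, "->", picks)
```

Clients only ever address the service by name, so Pods can come and go behind it without the callers noticing.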

Automatic binpacking:

- Automatically places containers based on their resource requirements and other constraints, while not sacrificing availability. Mix critical and best-effort workloads in order to drive up utilization and save even more resources.
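The placement idea can be sketched with a classic first-fit-decreasing heuristic in Python. This is only an illustration under simplifying assumptions (a single CPU resource measured in millicores, made-up pod and node names); the real Kubernetes scheduler filters and scores nodes on many more criteria.

```python
def place(pods, nodes):
    """Assign each pod to the first node with enough free CPU.

    pods  maps pod name  -> requested CPU in millicores.
    nodes maps node name -> free CPU in millicores.
    Returns a dict pod name -> node name (None if nothing fits).
    """
    free = dict(nodes)  # work on a copy so the caller's data is untouched
    placement = {}
    # Largest requests first: the "decreasing" part of first-fit decreasing.
    for pod, request in sorted(pods.items(), key=lambda kv: kv[1], reverse=True):
        target = next((n for n, cap in free.items() if cap >= request), None)
        placement[pod] = target
        if target is not None:
            free[target] -= request
    return placement


# Hypothetical workloads and node capacities.
pods = {"api": 700, "db": 900, "cache": 300, "batch": 500}
nodes = {"worker1": 1000, "worker2": 1500}
placement = place(pods, nodes)
print(placement)
```

With these numbers every pod finds a home: `db` fills most of `worker1`, and the other three share `worker2`.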

Storage orchestration:

- Automatically mount the storage system of your choice, whether from local storage, a public cloud provider such as GCP or AWS, or a network storage system such as NFS, iSCSI, Gluster, Ceph, Cinder, or Flocker.

Self-healing:

- Restarts containers that fail, replaces and reschedules containers when nodes die, kills containers that don’t respond to your user-defined health check, and doesn’t advertise them to clients until they are ready to serve.
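Self-healing boils down to a loop that compares the desired state with the observed state and corrects the difference. The Python sketch below shows one pass of such a loop; the function name, pod names, and the `replacement-N` naming are hypothetical, and it only replaces failed pods (it does not scale down).

```python
def reconcile(desired_replicas, pods):
    """Return the list of running pods after one pass of a toy control loop.

    pods maps a pod name to True (healthy) or False (failed health check).
    """
    # Drop every pod that failed its health check.
    running = [name for name, healthy in pods.items() if healthy]
    # Start replacements until the desired count is reached again.
    serial = 0
    while len(running) < desired_replicas:
        candidate = f"replacement-{serial}"
        serial += 1
        if candidate not in running:
            running.append(candidate)
    return running


# One of three hypothetical pods has failed its health check.
observed = {"nginx-a": True, "nginx-b": False, "nginx-c": True}
healed = reconcile(3, observed)
print(healed)
```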

Automated rollouts and rollbacks:

- Kubernetes progressively rolls out changes to your application or its configuration, while monitoring application health to ensure it doesn't kill all your instances at the same time. If something goes wrong, Kubernetes will roll back the change for you. Take advantage of a growing ecosystem of deployment solutions.

Secret and configuration management:

- Deploy and update secrets and application configuration without rebuilding your image and without exposing secrets in your stack configuration.
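To show what a secret looks like as an object, the Python sketch below builds a Secret manifest as a plain dictionary. The values under `data` in a Secret are base64-encoded, which is an encoding and not encryption. The secret name and the password used here are made up for the example.

```python
import base64
import json


def secret_manifest(name, values):
    """Build a Kubernetes Secret manifest with base64-encoded values."""
    data = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": data,
    }


# Hypothetical secret; never commit real credentials to a script like this.
manifest = secret_manifest("db-credentials", {"password": "s3cr3t"})
print(json.dumps(manifest, indent=2))
```

Because the secret lives in its own object, the container image does not need to be rebuilt when the password changes.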

Batch execution:

- In addition to services, Kubernetes can manage your batch and CI workloads, replacing containers that fail, if desired.

Horizontal scaling:

- Scale your application up and down with a simple command, with a UI, or automatically based on CPU usage.
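For CPU-based autoscaling, the Kubernetes documentation describes the core calculation as desired = ceil(current replicas × current metric / target metric). The Python sketch below implements just that formula; it leaves out the tolerance band and the minimum and maximum replica limits that the real autoscaler also applies, and the function name is my own.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent):
    """Core scaling formula: scale in proportion to how far CPU is from target."""
    ratio = current_cpu_percent / target_cpu_percent
    return math.ceil(current_replicas * ratio)


# 2 replicas at 90% CPU with a 50% target: scale up.
scale_up = desired_replicas(2, 90, 50)
# 4 replicas at 20% CPU with a 50% target: scale down.
scale_down = desired_replicas(4, 20, 50)
print(scale_up, scale_down)
```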

Docker with Kubernetes

As mentioned above, Docker and Kubernetes work at different levels. Under the hood, Kubernetes can integrate with the Docker engine to coordinate the scheduling and execution of Docker containers on the nodes, through the kubelet running on each of them. The Docker engine itself is responsible for running the actual container image, which is built by running 'docker build'. Higher-level concepts such as service discovery, load balancing, and network policies are handled by Kubernetes.

When used together, both Docker and Kubernetes are great tools for developing a modern cloud architecture, but they are fundamentally different at their core. It is important to understand the high-level differences between the technologies when building your stack.

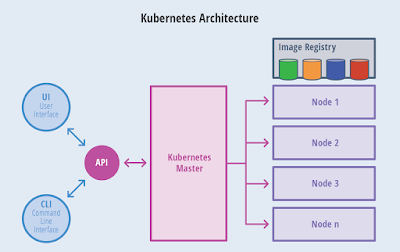

Figure: Docker with Kubernetes

Kubernetes Architecture

Figure: High-level Kubernetes architecture

Master: The master is the main controlling unit of the Kubernetes cluster. It is the main management contact point for administrators.

Node (worker or minion): In Kubernetes, the server that actually performs the work is the worker node. This is where containers are deployed.

Pod: Pods are the basic deployment unit in Kubernetes. Kubernetes defines a pod as a group of "closely related containers", so a pod can have multiple containers.

The Kubernetes master is a collection of three processes that run on a single node in your cluster, which is designated as the master node. Those processes are kube-apiserver, kube-controller-manager, and kube-scheduler. Each individual non-master node in your cluster runs two processes:

- kubelet, which communicates with the Kubernetes Master

- kube-proxy, a network proxy which reflects Kubernetes networking services on each node

Kubeadm: kubeadm automates the installation and configuration of Kubernetes components such as the API server, Controller Manager, and Kube DNS. It does not, however, create users or handle the installation of operating-system-level dependencies and their configuration.

Figure: Complex architecture of Kubernetes

Create a Kubernetes Cluster with one master node and two slave nodes...

Prerequisites

- An SSH key pair on your local Linux machine.

- Three servers running Ubuntu 18.04 with at least 1GB RAM each. If you do not have three servers, you can use three Docker containers instead; please refer to my blog article on installing Docker containers.

- Ansible installed on the local machine.

Step 01: Setting Up the Workspace Directory and Ansible Inventory File

- In this guide, the master's IP address is written as master_ip and the workers' IP addresses as slave_1_ip and slave_2_ip.

- Create a workspace directory named kube-cluster on your local machine and move into it:

$ mkdir ~/kube-cluster

$ cd ~/kube-cluster

- Create a file named hosts in that directory and open it with nano:

$ nano ~/kube-cluster/hosts

- Add the following content to the hosts file:

[masters]

master ansible_host=master_ip ansible_user=root

[workers]

worker1 ansible_host=slave_1_ip ansible_user=root

worker2 ansible_host=slave_2_ip ansible_user=root

[all:vars]

ansible_python_interpreter=/usr/bin/python3

- The inventory defines two groups, masters and workers, gives the address and SSH user of each server, and tells Ansible to use Python 3 on the remote servers.

- Save and close the file.
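Before running any playbook, it can help to double-check which hosts ended up in which group. The Python sketch below reads the inventory text with a deliberately simplified parser written for this article; it is not Ansible's own parser and only understands the constructs used in the hosts file above.

```python
INVENTORY = """\
[masters]
master ansible_host=master_ip ansible_user=root

[workers]
worker1 ansible_host=slave_1_ip ansible_user=root
worker2 ansible_host=slave_2_ip ansible_user=root

[all:vars]
ansible_python_interpreter=/usr/bin/python3
"""


def parse_inventory(text):
    """Return {group: {host: {var: value}}}; ':vars' groups map var -> value."""
    groups = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            groups[current] = {}
            continue
        if current is None:
            continue  # this sketch ignores hosts listed before any group
        if current.endswith(":vars"):
            key, _, value = line.partition("=")
            groups[current][key] = value
        else:
            host, *pairs = line.split()
            groups[current][host] = dict(pair.split("=", 1) for pair in pairs)
    return groups


groups = parse_inventory(INVENTORY)
for group, members in groups.items():
    print(group, "->", sorted(members))
```

Ansible also ships an `ansible-inventory` command that prints how it interprets the file, which is the authoritative check.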

Step 02: Creating a Non-Root User on All Remote Servers

- You need to create a non-root user with sudo privileges on all servers so that you can SSH into them manually as an unprivileged user.

- Using a non-root user for such tasks minimizes the risk of modifying or deleting important files or unintentionally performing other dangerous operations.

- Create a file called initial.yml in the workspace by typing:

$ nano ~/kube-cluster/initial.yml

- Add the following play to the file to create a non-root user with sudo privileges on all of the servers:

- hosts: all
  become: yes
  tasks:
    # Create the unprivileged login account.
    - name: create the 'ubuntu' user
      user: name=ubuntu append=yes state=present createhome=yes shell=/bin/bash

    # validate makes visudo check the edited file before it replaces /etc/sudoers.
    - name: allow 'ubuntu' to have passwordless sudo
      lineinfile:
        dest: /etc/sudoers
        line: 'ubuntu ALL=(ALL) NOPASSWD: ALL'
        validate: 'visudo -cf %s'

    # Install your local public key so that key-based SSH works for 'ubuntu'.
    - name: set up authorized keys for the ubuntu user
      authorized_key: user=ubuntu key="{{item}}"
      with_file:
        - ~/.ssh/id_rsa.pub

- This play creates a non-root user called ubuntu, grants it passwordless sudo, and installs your local public key so that you can SSH into the servers as that user.

- Save and close the file, then execute the playbook with the command below.

$ ansible-playbook -i hosts ~/kube-cluster/initial.yml

- It completes after a couple of minutes, with output like the following:

PLAY [all] ****

TASK [Gathering Facts] ****

ok: [master]

ok: [worker1]

ok: [worker2]

TASK [create the 'ubuntu' user] ****

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [allow 'ubuntu' user to have passwordless sudo] ****

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [set up authorized keys for the ubuntu user] ****

changed: [worker1] => (item=ssh-rsa AAAAB3...)

changed: [worker2] => (item=ssh-rsa AAAAB3...)

changed: [master] => (item=ssh-rsa AAAAB3...)

PLAY RECAP ****

master : ok=5 changed=4 unreachable=0 failed=0

worker1 : ok=5 changed=4 unreachable=0 failed=0

worker2 : ok=5 changed=4 unreachable=0 failed=0

- The preliminary setup is now complete.

Step 03: Installing Kubernetes' Dependencies

- You need to install Docker, kubeadm, kubelet, and kubectl.

- Create a file called kube-dependencies.yml with the command below.

$ nano ~/kube-cluster/kube-dependencies.yml

- Add the following content to the file to install the main dependencies. Docker, kubelet, and kubeadm go on every server; kubectl is installed only on the master.

- hosts: all
  become: yes
  tasks:
    - name: install Docker
      apt:
        name: docker.io
        state: present
        update_cache: true

    - name: install APT Transport HTTPS
      apt:
        name: apt-transport-https
        state: present

    - name: add Kubernetes apt-key
      apt_key:
        url: https://packages.cloud.google.com/apt/doc/apt-key.gpg
        state: present

    - name: add Kubernetes' APT repository
      apt_repository:
        repo: deb http://apt.kubernetes.io/ kubernetes-xenial main
        state: present
        filename: 'kubernetes'

    - name: install kubelet
      apt:
        name: kubelet=1.11.7-00
        state: present
        update_cache: true

    - name: install kubeadm
      apt:
        name: kubeadm=1.11.7-00
        state: present

- hosts: master
  become: yes
  tasks:
    - name: install kubectl
      apt:
        name: kubectl=1.11.7-00
        state: present
        force: yes

- Then execute the playbook with the command below.

$ ansible-playbook -i hosts ~/kube-cluster/kube-dependencies.yml

- When the execution completes, the output looks like this:

PLAY [all] ****

TASK [Gathering Facts] ****

ok: [worker1]

ok: [worker2]

ok: [master]

TASK [install Docker] ****

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [install APT Transport HTTPS] *****

ok: [master]

ok: [worker1]

changed: [worker2]

TASK [add Kubernetes apt-key] *****

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [add Kubernetes' APT repository] *****

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [install kubelet] *****

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [install kubeadm] *****

changed: [master]

changed: [worker1]

changed: [worker2]

PLAY [master] *****

TASK [Gathering Facts] *****

ok: [master]

TASK [install kubectl] ******

ok: [master]

PLAY RECAP ****

master : ok=9 changed=5 unreachable=0 failed=0

worker1 : ok=7 changed=5 unreachable=0 failed=0

worker2 : ok=7 changed=5 unreachable=0 failed=0

- All of the basic dependencies are now installed.

Step 04: Setting Up the Master Node

- In this step you initialize the cluster on the master node and install a Pod network plugin.

- Create an Ansible playbook called master.yml by typing:

$ nano ~/kube-cluster/master.yml

- Add the following content to the play to initialize the cluster and install Flannel:

- hosts: master
  become: yes
  tasks:
    - name: initialize the cluster
      shell: kubeadm init --pod-network-cidr=10.244.0.0/16 >> cluster_initialized.txt
      args:
        chdir: $HOME
        creates: cluster_initialized.txt

    - name: create .kube directory
      become: yes
      become_user: ubuntu
      file:
        path: $HOME/.kube
        state: directory
        mode: 0755

    - name: copy admin.conf to user's kube config
      copy:
        src: /etc/kubernetes/admin.conf
        dest: /home/ubuntu/.kube/config
        remote_src: yes
        owner: ubuntu

    - name: install Pod network
      become: yes
      become_user: ubuntu
      shell: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml >> pod_network_setup.txt
      args:
        chdir: $HOME
        creates: pod_network_setup.txt

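- The --pod-network-cidr=10.244.0.0/16 flag defines the private subnet from which Pod IP addresses are assigned. Flannel uses this subnet by default.

- The remaining tasks create the .kube directory for the ubuntu user, copy /etc/kubernetes/admin.conf into it so that kubectl can reach the cluster, and apply the Flannel manifest that sets up the Pod network.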
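To get a feel for the size of the 10.244.0.0/16 Pod network, the Python sketch below inspects it with the standard ipaddress module. The split into one /24 block per node is an assumption made for illustration, and the Pod IP is made up.

```python
import ipaddress

# The Pod network passed to kubeadm init.
pod_network = ipaddress.ip_network("10.244.0.0/16")
print("addresses in the Pod network:", pod_network.num_addresses)

# Assumption: each node receives one /24 slice of the Pod network.
node_subnets = list(pod_network.subnets(new_prefix=24))
print("possible per-node subnets:", len(node_subnets))
print("first two:", node_subnets[0], node_subnets[1])

# A hypothetical Pod IP and the slice it would belong to.
pod_ip = ipaddress.ip_address("10.244.1.5")
print(pod_ip, "in Pod network:", pod_ip in pod_network)
print(pod_ip, "in second slice:", pod_ip in node_subnets[1])
```

Whatever range you choose, it should not overlap with the network your servers themselves are on.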

- Save and exit the file, then execute the playbook with the command below.

$ ansible-playbook -i hosts ~/kube-cluster/master.yml

- When the execution completes, the output looks like this:

PLAY [master] ****

TASK [Gathering Facts] ****

ok: [master]

TASK [initialize the cluster] ****

changed: [master]

TASK [create .kube directory] ****

changed: [master]

TASK [copy admin.conf to user's kube config] *****

changed: [master]

TASK [install Pod network] *****

changed: [master]

PLAY RECAP ****

master : ok=5 changed=4 unreachable=0 failed=0

- Next, check the status of the master node. SSH into it:

$ ssh ubuntu@master_ip

- Then execute the command below on the master node.

$ kubectl get nodes

- The output will be as below:

NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   1d    v1.11.7

- The master can now start accepting worker nodes and executing tasks sent to the API server. You can now add the workers from your local machine.

Step 05: Setting Up the Worker Nodes

- Adding workers to the cluster involves executing a single command on each. This command includes the necessary cluster information, such as the IP address and port of the master's API server, and a secure token. Only nodes that pass in the secure token will be able to join the cluster.

- Create a workers.yml file in the workspace with the command below.

$ nano ~/kube-cluster/workers.yml

- Add the content below to the file:

- hosts: master
  become: yes
  gather_facts: false
  tasks:
    - name: get join command
      shell: kubeadm token create --print-join-command
      register: join_command_raw

    - name: set join command
      set_fact:
        join_command: "{{ join_command_raw.stdout_lines[0] }}"

- hosts: workers
  become: yes
  tasks:
    - name: join cluster
      shell: "{{ hostvars['master'].join_command }} >> node_joined.txt"
      args:
        chdir: $HOME
        creates: node_joined.txt

- The first play gets the join command that needs to be run on the worker nodes. This command will be in the following format: kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>. Once it gets the actual command with the proper token and hash values, the task sets it as a fact so that the next play will be able to access that info.
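The join command format described above can be picked apart with a few lines of Python, which is handy if you want to sanity-check what the first play captured. The sample command below is fabricated for this example: the IP address, token, and hash are placeholders, not values from a real cluster.

```python
import shlex


def parse_join_command(command):
    """Split a 'kubeadm join ...' command line into its three key parts."""
    parts = shlex.split(command)
    if parts[:2] != ["kubeadm", "join"]:
        raise ValueError("not a kubeadm join command")
    info = {"endpoint": None, "token": None, "ca_cert_hash": None}
    args = iter(parts[2:])
    for arg in args:
        if arg == "--token":
            info["token"] = next(args, None)
        elif arg == "--discovery-token-ca-cert-hash":
            info["ca_cert_hash"] = next(args, None)
        elif not arg.startswith("--"):
            info["endpoint"] = arg  # <master-ip>:<master-port>
    return info


# Placeholder values only; a real command comes from the master node.
sample = (
    "kubeadm join --token abcdef.0123456789abcdef 203.0.113.10:6443 "
    "--discovery-token-ca-cert-hash sha256:" + "ab" * 32
)
info = parse_join_command(sample)
print(info["endpoint"], info["token"])
```

Treat the real command like a password: anyone who holds a valid token and hash can add a node to the cluster.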

- Save and close the file. Then execute the play by typing the command below.

$ ansible-playbook -i hosts ~/kube-cluster/workers.yml

- The output will be as below:

PLAY [master] ****

TASK [get join command] ****

changed: [master]

TASK [set join command] *****

ok: [master]

PLAY [workers] *****

TASK [Gathering Facts] *****

ok: [worker1]

ok: [worker2]

TASK [join cluster] *****

changed: [worker1]

changed: [worker2]

PLAY RECAP *****

master : ok=2 changed=1 unreachable=0 failed=0

worker1 : ok=2 changed=1 unreachable=0 failed=0

worker2 : ok=2 changed=1 unreachable=0 failed=0

- With the addition of the worker nodes, your cluster is now fully set up and functional, with workers ready to run workloads. Before scheduling applications, let's verify that the cluster is working as intended.

Step 06: Cluster Verification

- Network issues can cause the cluster setup to fail, so check the status of the cluster by logging in to the master server over SSH.

- Log in to the master machine with the command below.

$ ssh ubuntu@master_ip

- Type the command below to check the status of the cluster.

$ kubectl get nodes

- The output will be as below:

NAME      STATUS   ROLES    AGE   VERSION
master    Ready    master   1d    v1.11.7
worker1   Ready    <none>   1d    v1.11.7
worker2   Ready    <none>   1d    v1.11.7

- If every node shows the status Ready, your setup is complete. If a node shows NotReady, the configuration of that node was not completed successfully.
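If you want to automate this check, the output of kubectl get nodes is easy to scan. The Python sketch below works on a pasted sample of the output rather than calling kubectl, and the NotReady row is invented to show a failing case. Note that it flags any status other than exactly Ready.

```python
SAMPLE_OUTPUT = """\
NAME      STATUS     ROLES    AGE   VERSION
master    Ready      master   1d    v1.11.7
worker1   Ready      <none>   1d    v1.11.7
worker2   NotReady   <none>   1d    v1.11.7
"""


def not_ready_nodes(output):
    """Return the names of nodes whose STATUS column is not exactly 'Ready'."""
    lines = output.strip().splitlines()
    status_col = lines[0].split().index("STATUS")
    bad = []
    for line in lines[1:]:
        fields = line.split()
        if fields[status_col] != "Ready":
            bad.append(fields[0])
    return bad


problems = not_ready_nodes(SAMPLE_OUTPUT)
print("nodes needing attention:", problems)
```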

Step 07: Running an Application on the Cluster

- To try out the cluster, we will deploy Nginx, a widely used web server.

- You can use the commands below for other containerized applications as well, provided you change the Docker image name and any relevant flags.

$ kubectl run nginx --image=nginx --port 80

- A deployment is a type of Kubernetes object that ensures there is always a specified number of pods running, based on a defined template, even if a pod crashes during the cluster's lifetime. With the kubectl v1.11 used in this guide, the command above creates a deployment named nginx, which in turn creates a pod with one container from the Nginx image in the Docker registry.
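The same deployment can also be written down as a manifest. The Python sketch below builds one as a dictionary and prints it as JSON, which kubectl accepts alongside YAML. The `app: nginx` label and the file name mentioned afterwards are my own choices for the example and are not what the kubectl run command above generates.

```python
import json


def nginx_deployment(replicas=1):
    """Build a minimal apps/v1 Deployment manifest for the nginx image."""
    labels = {"app": "nginx"}  # label chosen for this example
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx", "labels": labels},
        "spec": {
            # How many pods the deployment keeps running.
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "nginx",
                            "image": "nginx",
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


manifest = nginx_deployment(replicas=2)
print(json.dumps(manifest, indent=2))
```

Saved to a file such as nginx-deployment.json (a hypothetical name), the output could be applied with kubectl apply -f. Keeping the manifest in version control makes the desired state reviewable, which the one-line command does not.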

- To create a service that exposes the deployment, execute the following command:

$ kubectl expose deploy nginx --port 80 --target-port 80 --type NodePort

- To see the running services, type:

$ kubectl get services

- The output will be as below:

NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP             1d
nginx        NodePort    10.109.228.209   <none>        80:nginx_port/TCP   40m

- Here nginx_port stands for the port that Kubernetes assigned to the service on every node. Open http://slave_1_ip:nginx_port or http://slave_2_ip:nginx_port in a browser to see Nginx.

- The result looks like the image below.

Figure: Nginx default page
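The URL to open is simply a node's IP address combined with the node port from the PORT(S) column. The Python sketch below extracts that port and builds the URLs. The port 31234 and the 203.0.113.x addresses are placeholders for illustration, so substitute the values from your own cluster.

```python
def node_port(ports_field):
    """Extract the node port from a PORT(S) value such as '80:31234/TCP'.

    Returns None when the value has no node port (for example '443/TCP').
    """
    mapping, _, _protocol = ports_field.partition("/")
    _service_port, separator, port = mapping.partition(":")
    return int(port) if separator else None


def service_urls(node_ips, ports_field):
    """Build one URL per node for a NodePort service."""
    port = node_port(ports_field)
    if port is None:
        return []
    return [f"http://{ip}:{port}" for ip in node_ips]


# Placeholder worker IPs and node port.
urls = service_urls(["203.0.113.11", "203.0.113.12"], "80:31234/TCP")
for url in urls:
    print(url)
```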

- If you need to remove the Nginx application, first delete its service:

$ kubectl delete service nginx

- Make sure that the service is deleted by typing:

$ kubectl get services

- The output will be like below:

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   1d

- Deleting the service does not remove the deployment itself; kubectl delete deployment nginx does that.

If Kubernetes has caught your interest, start your next project with it.
